Agent or Workflow: How to Choose Architecture Without Hype

TL;DR: In production, the question is rarely “do we need AI?” It is “what control model does this task need?” If inputs are stable, quality criteria are formalized, and the error cost is high, workflow remains the default. If the task changes during execution and requires dynamic tool selection and plan adaptation, an agent is justified. In practice, hybrid wins most often: workflow as the skeleton, agent as a constrained executor in selected nodes.

There is a lot of noise around “agents”. Some teams move into complex orchestration too early. Others stay with rigid pipelines even when those pipelines already hurt development speed and output quality. Both extremes lead to the same result: rising cost, slower delivery, and weaker engineering clarity.

This article provides a practical decision framework for production systems: metrics, reliability, security, controlled cost, and clear responsibility boundaries between code, model, and human.

Scope and definitions

To avoid terminology drift, fix definitions first.

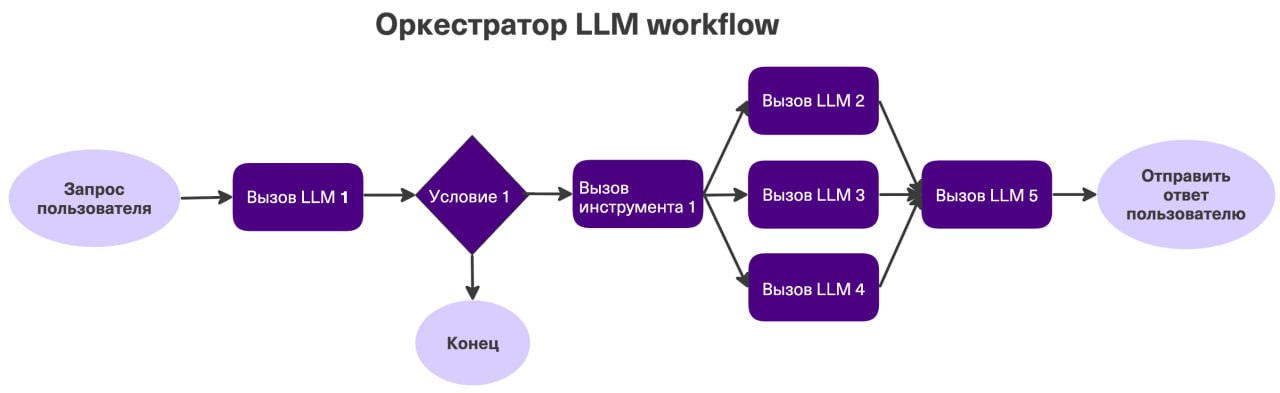

Workflow in this article means a deterministic graph of steps where transitions and branch conditions are defined in code. An LLM can be one of the nodes, but the system does not delegate control over the execution plan to it.

Agent means an executor that can choose the next step within explicit constraints. It can decide which tool to call, when to request more context, and how to reach the goal across iterations.

Hybrid means workflow as the outer control layer with bounded agentic nodes in selected sections. This usually gives the best reliability vs adaptability balance.

Why architecture choice matters more than model choice

A team can switch models in a week. Architectural debt changes more slowly and costs more to unwind. If the control loop is wrong, replacing the provider or the prompt usually does not fix the economics.

Core risks of choosing the wrong control model:

- overengineering where deterministic flow is enough;

- false confidence, when workflow simulates control but does not cover real input variance;

- blurred responsibility between orchestrator, prompts, and integrations;

- cost growth caused by extra LLM calls and repeated iterations.

A related production case around releases, security, and economics is covered in MLOps for a Support RAG Agent in 2026. This article extends that with an architecture selection framework.

Signals that workflow is the right baseline

Workflow usually wins when most conditions below are true:

- input is structured or can be normalized reliably;

- correctness can be formalized in rules and tests;

- error cost is high and behavior must stay predictable;

- each transition must be auditable;

- stable latency under SLO is required.

Typical task classes:

- schema-constrained extraction with validation;

- document classification by fixed taxonomy;

- reporting pipelines with strict output format;

- operational runbook flows with allowlisted actions.

Signals that agent is justified

An agent is justified when the task truly needs adaptive planning, not just better text in one node. Typical signals:

- input varies heavily and context is gathered on the fly;

- the system must choose between tools to complete the task;

- multi-step strategy is required and next steps depend on intermediate results;

- requirements change often and faster iteration is critical.

Typical task classes:

- research and analysis workflows with heterogeneous data sources;

- complex support triage with clarifications and branch logic;

- semi-automated engineering assistants working with multiple APIs.

Decision framework: 5 scoring axes

Use a simple score from 1 to 5 per axis, where 1 means “closer to workflow” and 5 means “closer to agent”.

| Axis | 1-2 (workflow) | 4-5 (agent) |
|---|---|---|
| Input variance | Stable input, clear schema | Heterogeneous input, changing schema |
| Output determinism | Strict format, hard rules | Multiple acceptable paths to goal |
| Tool complexity | 1-2 tools, fixed order | Many tools, dynamic selection needed |
| Error cost | High impact, strict control needed | Tolerable with human escalation |
| Change velocity | Stable requirements | Frequent requirement changes |

Simple interpretation:

- total <= 12: workflow baseline;

- total 13-18: hybrid architecture;

- total >= 19: agent-first architecture with strict guardrails.

This is not a law of nature. It is a practical alignment tool between engineering, product, and business. Calibrate thresholds using incident history, latency constraints, and observed cost_per_success.
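The scoring rule can be sketched in a few lines of Python. The axis keys and the function name `recommend_architecture` are illustrative assumptions; the thresholds are the ones listed above and should be recalibrated for each team.

```python
# Axis names are hypothetical identifiers for the five axes in the table.
AXES = (
    "input_variance",
    "output_determinism",
    "tool_complexity",
    "error_cost",
    "change_velocity",
)


def recommend_architecture(scores: dict[str, int]) -> tuple[int, str]:
    """Sum the five axis scores (1-5 each) and map the total to a baseline."""
    missing = [axis for axis in AXES if axis not in scores]
    if missing:
        raise ValueError(f"missing axes: {missing}")
    for axis in AXES:
        if not 1 <= scores[axis] <= 5:
            raise ValueError(f"{axis} must be between 1 and 5")
    total = sum(scores[axis] for axis in AXES)
    if total <= 12:
        return total, "workflow"
    if total <= 18:
        return total, "hybrid"
    return total, "agent-first"


example = {
    "input_variance": 4,
    "output_determinism": 3,
    "tool_complexity": 4,
    "error_cost": 2,
    "change_velocity": 3,
}
print(recommend_architecture(example))  # total 16 falls in the hybrid band
```

Keeping the thresholds in code makes it easy to review them next to incident history instead of renegotiating them in every design discussion.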

Three practical architecture patterns

1) Deterministic workflow with LLM nodes

    Input -> Validate -> Normalize -> LLM Task A -> Rule Check -> Output
                                          |                         |
                                          +--> Fallback Template ---+

The system remains controllable. The LLM is constrained to a bounded segment, while reliability is enforced by code and rules.
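A minimal sketch of this pattern, assuming a classification task with a fixed taxonomy. The labels, the `llm` callable, and the choice to fall back both when the call fails and when its output fails the rule check are illustrative assumptions, not a prescribed design.

```python
from typing import Callable

# Hypothetical fixed taxonomy and fallback label for illustration.
ALLOWED_LABELS = {"billing", "outage", "other"}
FALLBACK_LABEL = "other"


def rule_check(candidate: str) -> bool:
    """Code, not the model, decides whether the output is acceptable."""
    return candidate in ALLOWED_LABELS


def run_workflow(raw: str, llm: Callable[[str], str]) -> str:
    # Validate: reject input the pipeline cannot handle.
    text = raw.strip()
    if not text:
        raise ValueError("empty input")
    # Normalize: collapse whitespace and lowercase.
    normalized = " ".join(text.split()).lower()
    # LLM node: a single bounded call; any failure routes to the fallback.
    try:
        candidate = llm(normalized).strip().lower()
    except Exception:
        return FALLBACK_LABEL
    # Rule check: only allowlisted labels leave the workflow.
    return candidate if rule_check(candidate) else FALLBACK_LABEL


print(run_workflow("  My invoice is wrong ", lambda text: "Billing"))
print(run_workflow("hello", lambda text: "something unexpected"))
```

Every transition here is ordinary code, so each branch can be unit-tested without calling a model.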

2) Hybrid: workflow skeleton + bounded agent

    Input -> Policy Gate -> Agent Node -> Tool Proxy -> Validator -> Output
                                                        |               |
                                                        +--> Escalate --+

This is the most common production pattern. The agent has local autonomy inside one node, not over the whole system.
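One way to picture "local autonomy inside one node" is a loop with a hard step budget and a fixed tool set. This is a sketch under assumptions: `choose_step` stands in for the model's decision, and the step format (`action`, `args`, `result`) is hypothetical.

```python
from typing import Callable

ToolFn = Callable[[dict], dict]
# choose_step(goal, history) returns {"action": <tool name or "finish">, ...}.
ChooseStep = Callable[[str, list], dict]


def run_agent_node(
    goal: str,
    choose_step: ChooseStep,
    tools: dict[str, ToolFn],
    max_steps: int = 3,
) -> dict:
    """Let the agent pick tools, but only declared ones and only within a budget."""
    history: list[dict] = []
    for _ in range(max_steps):
        step = choose_step(goal, history)
        if step["action"] == "finish":
            return {"status": "done", "result": step["result"], "steps": len(history)}
        tool = tools.get(step["action"])
        if tool is None:
            # Undeclared tool: stop and hand control back to the workflow.
            return {
                "status": "escalate",
                "reason": f"undeclared tool: {step['action']}",
                "steps": len(history),
            }
        history.append({"tool": step["action"], "output": tool(step.get("args", {}))})
    return {"status": "escalate", "reason": "step budget exhausted", "steps": len(history)}


# Scripted decisions replace a real model call in this example.
script = iter([
    {"action": "search", "args": {"query": "refund policy"}},
    {"action": "finish", "result": "answer drafted"},
])
outcome = run_agent_node(
    "answer the ticket",
    lambda goal, history: next(script),
    {"search": lambda args: {"hits": 2}},
)
print(outcome)
```

The surrounding workflow only sees a `done` or `escalate` status, which keeps the outer graph deterministic.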

3) Multi-agent graph

    Goal -> Planner -> Specialist Agents -> Critic -> Aggregator -> Output

This pattern is useful less often than expected. Use it only when metrics prove value and observability is mature enough.

A visual interface example with tool calling and agent control is available in the igorOS post.

Contract between orchestrator and tools

Most incidents in agentic systems are not caused by the model itself. They happen at tool boundaries. Minimum contract: strict input/output schema, versioning, idempotency key, timeout budget, predictable error codes.

Example of a minimal tool contract:

    {
      "tool_name": "create_ticket",
      "version": "v1",
      "input_schema": {
        "type": "object",
        "required": ["title", "severity", "service"],
        "properties": {
          "title": { "type": "string", "minLength": 8 },
          "severity": { "type": "string", "enum": ["low", "medium", "high"] },
          "service": { "type": "string" },
          "idempotency_key": { "type": "string" }
        }
      },
      "safety": {
        "requires_human_approval": true,
        "allowed_roles": ["sre", "l2_support"]
      }
    }

If this contract does not exist, the system is not ready to scale regardless of prompt quality.
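To show how such a contract can be enforced at the tool boundary, here is a hand-rolled check for the few schema keywords used in the example (`required`, `type: string`, `minLength`, `enum`). It is a simplified sketch, not a JSON Schema implementation, and rejecting unknown fields is an extra assumption that is stricter than JSON Schema's default.

```python
INPUT_SCHEMA = {
    "type": "object",
    "required": ["title", "severity", "service"],
    "properties": {
        "title": {"type": "string", "minLength": 8},
        "severity": {"type": "string", "enum": ["low", "medium", "high"]},
        "service": {"type": "string"},
        "idempotency_key": {"type": "string"},
    },
}


def validate_input(schema: dict, payload: dict) -> list[str]:
    """Return a list of violations; an empty list means the payload is accepted."""
    errors = []
    for name in schema.get("required", []):
        if name not in payload:
            errors.append(f"missing required field: {name}")
    for name, value in payload.items():
        rules = schema["properties"].get(name)
        if rules is None:
            errors.append(f"unknown field: {name}")  # stricter than the spec default
            continue
        if rules.get("type") == "string" and not isinstance(value, str):
            errors.append(f"{name}: expected string")
            continue
        if isinstance(value, str) and len(value) < rules.get("minLength", 0):
            errors.append(f"{name}: shorter than {rules['minLength']} characters")
        if "enum" in rules and value not in rules["enum"]:
            errors.append(f"{name}: must be one of {rules['enum']}")
    return errors


good = {"title": "Database latency spike", "severity": "high", "service": "billing"}
bad = {"title": "short", "severity": "urgent"}
print(validate_input(INPUT_SCHEMA, good))
print(validate_input(INPUT_SCHEMA, bad))
```

In a real system a full JSON Schema validator would replace this function; the point is that the orchestrator rejects a call before the tool runs, using rules the model cannot rewrite.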

Evals: what to measure before and after release

In “agent vs workflow”, winners are chosen by measured outcomes, not team preferences.

Minimum offline eval set:

- task_success_rate on target scenarios;

- tool_call_precision and tool_call_recall;

- routing error share;

- policy violation share;

- task economics (cost_per_success).
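The tool-call metrics need a concrete definition before they can be compared across releases. The sketch below assumes one simple definition: for each eval task, compare the set of tool names the system called with the set a reference run expects, ignoring order and arguments. Other definitions are equally valid; what matters is fixing one.

```python
def tool_call_metrics(
    expected: list[set[str]], actual: list[set[str]]
) -> tuple[float, float]:
    """Micro-averaged precision and recall over per-task sets of tool names."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must cover the same tasks")
    true_positives = sum(len(e & a) for e, a in zip(expected, actual))
    called = sum(len(a) for a in actual)
    wanted = sum(len(e) for e in expected)
    precision = true_positives / called if called else 0.0
    recall = true_positives / wanted if wanted else 0.0
    return precision, recall


def task_success_rate(outcomes: list[bool]) -> float:
    """Share of eval tasks that met the success criterion."""
    if not outcomes:
        raise ValueError("no eval tasks")
    return sum(outcomes) / len(outcomes)


# Two illustrative eval tasks (made-up data).
expected_calls = [{"search", "create_ticket"}, {"search"}]
actual_calls = [{"search"}, {"search", "send_email"}]
precision, recall = tool_call_metrics(expected_calls, actual_calls)
print(round(precision, 3), round(recall, 3))
print(task_success_rate([True, False, True, True]))
```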

Minimum online metrics:

- p95 latency and timeout rate;

- human_escalation_rate;

- rollback_rate by release;

- incident_count by severity;

- user_acceptance_rate for the business function.
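As an illustration of the first online metric, p95 latency and timeout rate can be computed from raw samples as below. The nearest-rank percentile method and the sample values are assumptions; monitoring systems often use interpolated or histogram-based percentiles instead.

```python
def nearest_rank_percentile(values: list[float], percent: int) -> float:
    """Nearest-rank percentile: the smallest sample covering `percent` of the data."""
    if not values:
        raise ValueError("no samples")
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    ordered = sorted(values)
    rank = (percent * len(ordered) + 99) // 100  # integer ceil(percent * n / 100)
    return ordered[rank - 1]


def timeout_rate(latencies_ms: list[float], timeout_ms: float) -> float:
    """Share of requests that exceeded the timeout budget."""
    if not latencies_ms:
        raise ValueError("no samples")
    return sum(1 for value in latencies_ms if value > timeout_ms) / len(latencies_ms)


# Made-up samples: 100 ms, 200 ms, ..., 2000 ms.
latencies_ms = [100.0 * i for i in range(1, 21)]
p95 = nearest_rank_percentile(latencies_ms, 95)
print(p95, timeout_rate(latencies_ms, timeout_ms=1500))
```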

Set SLO before debating “model quality”. This removes emotional discussion and forces better architecture decisions.

For another concrete production quality framework, see MLOps for Production ML: 7 Release Gates for Controlled Rollouts.

Observability for agentic loops

Without step-level traces, an agent quickly becomes a black box. You need one trace across the full task lifecycle: from inbound request to final tool effect.

Useful minimum event log:

    request_id
    session_id
    planner_decision
    selected_tool
    tool_input_hash
    tool_result_status
    policy_check_status
    human_approval_status
    total_tokens
    total_cost_usd
    latency_ms

This is enough to run incident analysis and build release gates on data, not intuition.
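A sketch of how one step of this log might be represented, using the field names above. The class name `StepEvent`, the field types, the status strings, and the choice of SHA-256 over canonical JSON for `tool_input_hash` are assumptions for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def hash_tool_input(tool_input: dict) -> str:
    """Hash a canonical JSON form so equal inputs hash equally and raw values stay out of logs."""
    canonical = json.dumps(tool_input, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class StepEvent:
    request_id: str
    session_id: str
    planner_decision: str
    selected_tool: str
    tool_input_hash: str
    tool_result_status: str
    policy_check_status: str
    human_approval_status: str
    total_tokens: int
    total_cost_usd: float
    latency_ms: int

    def to_json(self) -> str:
        """Serialize as one JSON line, ready for a log pipeline."""
        return json.dumps(asdict(self), sort_keys=True)


event = StepEvent(
    request_id="req-1",
    session_id="sess-1",
    planner_decision="call_tool",
    selected_tool="create_ticket",
    tool_input_hash=hash_tool_input({"title": "Database latency spike", "severity": "high"}),
    tool_result_status="ok",
    policy_check_status="passed",
    human_approval_status="approved",
    total_tokens=1200,
    total_cost_usd=0.018,
    latency_ms=840,
)
print(event.to_json())
```

Hashing the tool input instead of logging it keeps traces joinable on identical calls without copying potentially sensitive payloads into logs.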

Security: where agent systems usually fail

When moving from workflow to agent, attack surface expands: dynamic tool selection creates more points where untrusted text can alter system behavior.

Baseline guardrails:

- strict tool allowlist, block undeclared calls;

- secret isolation, no direct model access to credential stores;

- policy check before every side-effect action;

- mandatory human approval for irreversible operations;

- filtering and labeling of untrusted context before use.
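Several of these guardrails can live in a single authorization function that runs before every tool call. The sketch below is one possible shape; `ToolSpec`, its flags, and the returned status strings are hypothetical, and the policy and approval results are passed in as booleans to keep the example small.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    name: str
    has_side_effects: bool
    irreversible: bool


def authorize_call(
    tool_name: str,
    registry: dict[str, ToolSpec],
    policy_ok: bool,
    human_approved: bool,
) -> str:
    """Decide in code, before execution, whether a requested tool call may run."""
    spec = registry.get(tool_name)
    if spec is None:
        return "blocked: tool not on allowlist"
    if spec.has_side_effects and not policy_ok:
        return "blocked: policy check failed"
    if spec.irreversible and not human_approved:
        return "pending: human approval required"
    return "allowed"


registry = {
    "search_docs": ToolSpec("search_docs", has_side_effects=False, irreversible=False),
    "create_ticket": ToolSpec("create_ticket", has_side_effects=True, irreversible=False),
    "delete_account": ToolSpec("delete_account", has_side_effects=True, irreversible=True),
}
print(authorize_call("run_shell", registry, policy_ok=True, human_approved=True))
print(authorize_call("delete_account", registry, policy_ok=True, human_approved=False))
```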

This aligns with OWASP Top 10 for LLM Applications and NIST AI RMF: Generative AI Profile.

Cost: choose with real economics, not request price

Compare workflow vs agent using cost per successfully completed task in the target business function, not request cost alone.

Working formula:

    Cost per useful task =
        (LLM_cost + Tool_cost + Infra_cost + Human_review_cost) / Success_count

If an agent lowers manual effort but sharply increases escalations and retries, total economics can still be worse than workflow. Cost must always be interpreted together with quality and time.
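A worked example of the formula. All figures below are invented to show the arithmetic and are not measurements from any real system.

```python
def cost_per_useful_task(
    llm_cost: float,
    tool_cost: float,
    infra_cost: float,
    human_review_cost: float,
    success_count: int,
) -> float:
    """Total spend for a period divided by the number of successfully completed tasks."""
    if success_count <= 0:
        raise ValueError("success_count must be positive")
    return (llm_cost + tool_cost + infra_cost + human_review_cost) / success_count


# Hypothetical monthly totals in USD for the same business function.
workflow_cost = cost_per_useful_task(40, 10, 50, 300, success_count=800)
agent_cost = cost_per_useful_task(180, 30, 60, 250, success_count=650)
print(f"workflow: {workflow_cost:.2f} USD, agent: {agent_cost:.2f} USD")
```

In this made-up scenario the agent saves human review time but completes fewer tasks at a higher LLM cost, so its cost per useful task (0.80 USD) is higher than the workflow's (0.50 USD).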

Practical rollout plan: workflow to agent

Stage 0. Baseline workflow

Start with a deterministic flow. Establish a reference baseline for quality and cost.

Stage 1. Single agent node

Introduce agent in one high-variance segment. Keep the rest in workflow control.

Stage 2. Release gates and policy

Add mandatory pre-release gates: eval threshold, budget threshold, policy compliance. Any failed threshold blocks promotion.
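A release gate of this kind can be a small pure function over the eval report. The metric keys reuse names from the eval section; the threshold class, gate labels, and numbers are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateThresholds:
    min_task_success_rate: float
    max_cost_per_success: float
    max_policy_violation_share: float


def failed_gates(metrics: dict[str, float], thresholds: GateThresholds) -> list[str]:
    """Return the names of gates that fail; an empty list means promotion may proceed."""
    failed = []
    if metrics["task_success_rate"] < thresholds.min_task_success_rate:
        failed.append("eval")
    if metrics["cost_per_success"] > thresholds.max_cost_per_success:
        failed.append("budget")
    if metrics["policy_violation_share"] > thresholds.max_policy_violation_share:
        failed.append("policy")
    return failed


def can_promote(metrics: dict[str, float], thresholds: GateThresholds) -> bool:
    # Any single failed threshold blocks promotion.
    return not failed_gates(metrics, thresholds)


thresholds = GateThresholds(
    min_task_success_rate=0.90,
    max_cost_per_success=0.60,
    max_policy_violation_share=0.0,
)
candidate = {
    "task_success_rate": 0.93,
    "cost_per_success": 0.72,
    "policy_violation_share": 0.0,
}
print(failed_gates(candidate, thresholds), can_promote(candidate, thresholds))
```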

Stage 3. Scale by business function

After stable operation in one flow, extend to adjacent functions. Each function gets its own eval set and SLO profile.

This sequence usually reduces the risk of a large architectural swing in which system complexity grows faster than the team's observability and support capacity.

Common anti-patterns

- Agent with no responsibility boundary. Autonomy is undefined, human control is undefined.

- Prompt instead of contract. Tool inputs and outputs are not formalized.

- Demo-driven decisions. Pilot looks good, but production metrics are weak.

- No budget controls. Cost is reviewed only after escalation.

- Model-orchestrator role confusion. Model decides what code should decide.

Open-source references used in this article

Useful repository for architecture discipline:

It works well as an engineering checklist for tool calls, state, context, and responsibility boundaries in agentic systems.

For an implementation-oriented bridge between API contracts and tool interfaces:

This repository is relevant for “workflow skeleton + bounded agent” setups with explicit contract layers.

Summary

In production, “agent vs workflow” is not an ideology debate. It is an engineering decision with measurable inputs: variance, risk, cost, and required change velocity.

The default position is usually workflow. Agentic behavior is added where data shows it improves the target business metric.

When architecture choice is encoded in contracts, evals, and policy, the system stays controllable as complexity grows.